Technology has become an inseparable part of our everyday life. By one widely cited estimate, 90 percent of the world's data was created in the last two years, and consumer expectations keep growing each year. As tech companies struggle to keep up with the pace, they rely more on learning algorithms to make things easier. These technologies are often associated with artificial intelligence, machine learning, deep learning, and neural networks, whether through search engines, object recognition in photos on your social media feed, or voice-controlled ‘smart’ assistants. Still, there is some confusion about what these terms mean and how they differ. In this blog post, you will find an easily digestible definition of three of these terms (machine learning, neural networks, and artificial intelligence) that should help you tell them apart.

Machine Learning

Machine learning (ML) is a branch of artificial intelligence (AI) and computer science devoted to understanding and building methods that use data to improve performance on a set of tasks, a process that may be described as learning. Using data and algorithms, it imitates how humans learn, gradually improving its accuracy. It focuses on training machines on historical data so that they can process future inputs based on learned patterns. From the training data, machine learning algorithms build a model that makes predictions or decisions without explicit programming, that is, without manually written instructions telling the system what to do. A subset of machine learning is closely related to computational statistics, which focuses on making predictions using computers, but not all machine learning is statistical learning.
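To make "training on historical data to process future inputs" concrete, here is a minimal sketch in plain Python. It assumes the simplest possible model, a straight line fitted by least squares; the data (hours studied versus exam score) and the function names `fit_line` and `predict` are made up for illustration and are not taken from any particular library.

```python
def fit_line(xs, ys):
    """Learn slope and intercept of y = slope * x + intercept by least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # How x and y vary together, and how much x varies on its own.
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply the learned pattern to an input the model has not seen before."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical historical data: hours studied and the resulting exam score.
hours = [1.0, 2.0, 3.0, 4.0]
scores = [52.0, 54.0, 56.0, 58.0]

model = fit_line(hours, scores)   # the "training" step
print(predict(model, 5.0))        # prediction for a new, unseen input
```

Nobody wrote a rule saying "each extra hour adds two points"; the slope and intercept come from the data, which is the sense in which the model is learned rather than explicitly programmed.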

Use cases: Machine learning algorithms are used in a wide range of applications, such as medicine, e-mail filtering, speech recognition, and computer vision, where it is difficult to develop conventional algorithms to perform the needed tasks.


Neural Networks

Neural networks, also known as artificial neural networks (ANNs), are a subcategory of machine learning. Loosely based on how neurons signal each other within the human brain, a neural net consists of multiple (up to millions of) processing nodes that are densely interconnected and organized into node layers. Each network is built from an input layer, at least one hidden layer, and an output layer. An individual node might be connected to several nodes in the layer beneath it, from which it receives data, and to several nodes in the layer above it, to which it sends data. Just like in the human brain, signals travel from the input layer to the last layer (the output layer), possibly after traversing the layers multiple times.

The “signal” at a connection is a real number, and the output of each neuron is computed by some non-linear function of the sum of its inputs. The connections are called edges. Neurons and edges typically have a weight that adjusts as learning proceeds. The weight increases or decreases the strength of the signal at a connection. Neurons may have a threshold such that a signal is sent only if the aggregate signal crosses that threshold. Typically, neurons are aggregated into layers. Different layers may perform different transformations on their inputs.
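The computation of a single neuron described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a reference implementation: the sigmoid is just one common choice of non-linear function, the `bias` term is an addition not mentioned in the text, and all names and numbers are hypothetical.

```python
import math


def sigmoid(z):
    """A common non-linear function that squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(inputs, weights, bias):
    """Weight each incoming signal, sum the results, then apply the non-linearity."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(weighted_sum)


def fires(inputs, weights, bias, threshold=0.5):
    """Send a signal onward only if the aggregate signal crosses the threshold."""
    return neuron_output(inputs, weights, bias) > threshold


# Two incoming signals; a larger weight strengthens the connection it belongs to.
print(neuron_output([1.0, 1.0], [2.0, 2.0], -1.0))
print(fires([1.0, 1.0], [2.0, 2.0], -1.0))
print(fires([0.0, 0.0], [2.0, 2.0], -1.0))
```

Learning then amounts to nudging the values in `weights` (and `bias`) so that the neuron fires for the right inputs.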

A huge volume of information needs to be fed to ANNs in order for them to learn. For example, if your goal is to teach an artificial neural network to differentiate between two objects (A and B), the training set (the initial information the ANN learns from) would need to contain thousands of images tagged as ‘object A’ or ‘object B’ so the network could start learning. Once it has been trained on a large enough volume of data, it will try to classify future inputs based on what it thinks it is seeing (or hearing, depending on the data set) as the input passes through its different units. During the training period, the machine’s output is compared to the description provided by humans. The output is validated when the human description and what the machine thinks it observes match. If the output is incorrect, the error is passed back through the layers to adjust the weights.
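The compare-and-adjust loop described above can be sketched with the classic perceptron learning rule. Note that this is a deliberately simplified stand-in: it trains a single neuron, whereas real multi-layer networks are usually trained with backpropagation. The four training points below replace the thousands of tagged images, and every name and number is hypothetical.

```python
def predict(weights, bias, features):
    """Classify an input: 1 means 'object A', 0 means 'object B'."""
    total = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if total > 0 else 0


def train(samples, labels, epochs=20, learning_rate=0.1):
    """Perceptron rule: adjust the weights only when the output is wrong."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in zip(samples, labels):
            # Compare the machine's output with the human-provided tag.
            error = label - predict(weights, bias, features)
            if error != 0:
                weights = [w + learning_rate * error * x
                           for w, x in zip(weights, features)]
                bias += learning_rate * error
    return weights, bias


# Hypothetical training set: two numeric features per example, tagged by a human.
samples = [[1.0, 1.0], [2.0, 1.5], [-1.0, -1.0], [-2.0, -0.5]]
labels = [1, 1, 0, 0]

weights, bias = train(samples, labels)
print([predict(weights, bias, s) for s in samples])
print(predict(weights, bias, [1.5, 2.0]))  # classify a new, unseen input
```

When a prediction matches its tag, nothing changes; when it does not, the weights shift in the direction that would have made the answer correct.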

Use cases: Vehicle control, trajectory prediction, process control, general game playing, pattern recognition, radar systems, face identification, signal classification, object recognition, gesture, speech, handwritten and printed text recognition, automated trading systems, machine translation, social network filtering, e-mail spam filtering, and more.

Artificial Intelligence

Artificial intelligence (AI) is the broadest term of the three. It refers to the part of computer science that aims to make machines think and act the way humans do, which includes complex tasks such as observing, learning, planning, and making decisions to solve problems. It is also used to predict, automate, and optimize tasks that humans have historically done, such as speech and facial recognition, decision making, and translation.

Use cases: Advanced web search engines, recommendation systems, speech-recognition assistants such as Siri and Alexa, self-driving cars, automated decision-making, strategic games like chess, and more.

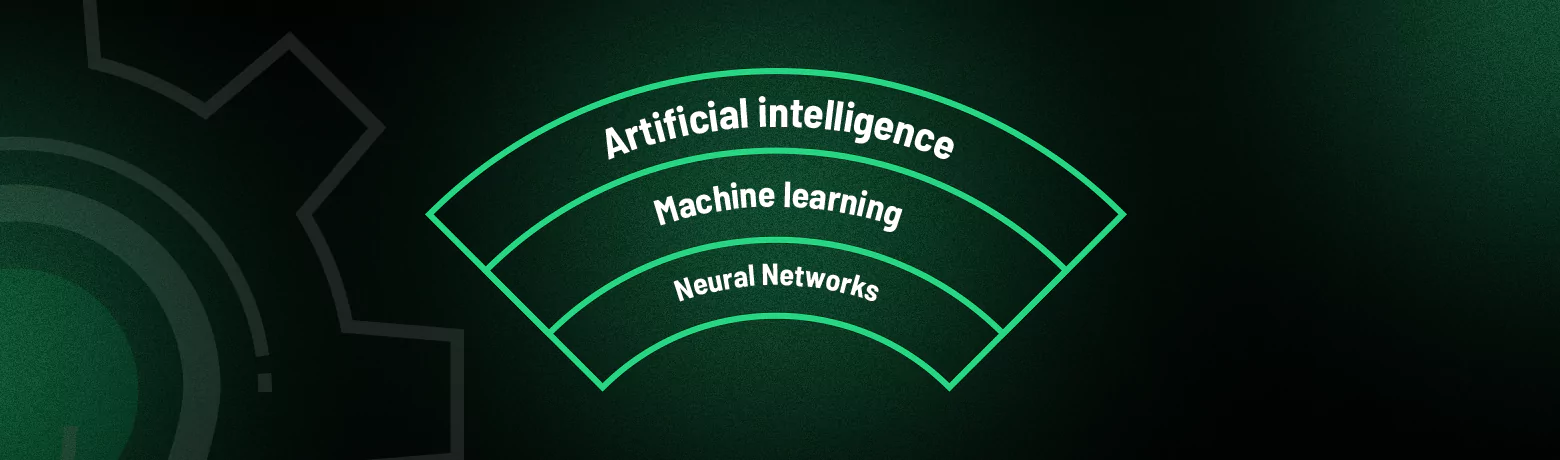

How do artificial intelligence, machine learning, and neural networks overlap?

Machine learning is a part of artificial intelligence. Deep learning is a subfield of machine learning, and neural networks are the core of deep learning algorithms. The number of node layers, or depth, of neural networks is what distinguishes a single neural network from a deep learning algorithm.
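As a rough illustration of what "depth" means, the sketch below represents a network as a plain Python list of layers and passes a signal through them in order. The weights are arbitrary made-up numbers, `tanh` is only one possible non-linear function, and the names `layer_forward` and `network_forward` are hypothetical; the only point is that a deeper network is the same structure with more layers stacked.

```python
import math


def layer_forward(inputs, weights, biases):
    """Compute the output of every node in one layer (one weight row per node)."""
    return [
        math.tanh(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def network_forward(inputs, layers):
    """Pass the signal through each layer in turn, from input side to output."""
    signal = inputs
    for weights, biases in layers:
        signal = layer_forward(signal, weights, biases)
    return signal


# Each entry is (weights, biases) for one layer; all values are arbitrary.
hidden = ([[0.5, -0.5], [0.3, 0.8]], [0.0, 0.1])    # 2 inputs -> 2 nodes
extra = ([[0.2, 0.4], [-0.6, 0.1]], [0.0, 0.0])     # 2 inputs -> 2 nodes
output = ([[1.0, -1.0]], [0.0])                     # 2 inputs -> 1 node

shallow = [hidden, output]
deep = [hidden, extra, extra, output]

print(len(shallow), network_forward([1.0, 2.0], shallow))
print(len(deep), network_forward([1.0, 2.0], deep))
```

Both networks run through the same `network_forward` function; what changes between them is only the number of layers in the list.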